Fetching Website Metadata with a Ruby Gem

Saturday 05 April 2025

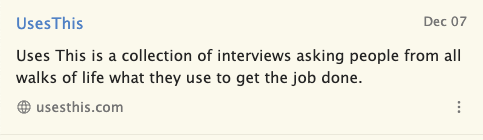

When users share links on Lettra, their post is rendered with a small footer including a generic link SVG followed by the website host.

This is what the very first iteration looked like:

Apps like Bluesky and iMessage have accustomed us to rich link previews generated from a website’s OpenGraph (OG) metadata, which look like this:

I wanted something similar for links shared on Lettra. I started with the favicon, which adds a bit of color (and thus context) to shared links without too much distracting metadata.

Previewbox

My first solution involved using the open-source Previewbox Web Component from Marius Bongarts. This approach uses client-side JavaScript to make an AJAX request when rendering each link.

For modern websites with valid and up-to-date metadata, it generates great-looking previews.

The problem shows up with older sites. If Previewbox can’t find OG metadata, the component renders as an empty block. Everything on the Lettra side works, but the empty block may lead users to think something is broken. There’s nothing wrong with these sites; they’re just older, and I couldn’t find a simple way to set fallback content for links whose OpenGraph data failed to load.

Here’s an example using https://roadsideamerica.com:

I created some manual fallbacks for more popular hostnames like Facebook and Instagram, but this approach quickly becomes convoluted, even with the logic in a helper separate from the View layer.
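For illustration, a hostname-based fallback helper might look roughly like the sketch below. The constant names, the method name, and the icon paths are all hypothetical, not Lettra's actual code. It mostly shows why this gets unwieldy: every host that needs special handling has to be added to the table by hand.

```ruby
require "uri"

# Hypothetical lookup table: each host that needs special handling
# must be listed here manually.
FALLBACK_ICONS = {
  "facebook.com"  => "/icons/facebook.svg",
  "instagram.com" => "/icons/instagram.svg"
}.freeze

# Hypothetical path to the generic link icon.
GENERIC_ICON = "/icons/link.svg"

# Returns a fallback icon path for the given URL, or the generic link
# icon when the host is unknown or the URL cannot be parsed.
def fallback_icon_for(url)
  host = URI.parse(url).host
  return GENERIC_ICON if host.nil?

  FALLBACK_ICONS.fetch(host.downcase.delete_prefix("www."), GENERIC_ICON)
rescue URI::InvalidURIError
  GENERIC_ICON
end
```

Calling `fallback_icon_for("https://www.facebook.com/some/page")` would return the Facebook icon path, while any host missing from the table gets the generic icon.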

A second issue with Previewbox is that all data is fetched through an API. I’m not keen on having previews break when I inadvertently hit an arbitrary request limit, so I opted for a backend solution instead.

MetaInspector

There’s a Ruby gem called metainspector which serves this exact purpose. From its description:

MetaInspector lets you scrape a web page and get its links, images, texts, meta tags…

I first tried calling it from the View layer, and it was painfully slow. That’s to be expected, of course, since each link is fetched synchronously during rendering, and every fetch is a new network request taking anywhere from 4 ms to 200 ms.

It was obvious the fetching would have to happen ahead of time, when a link is saved, rather than on every render.

Updating Link Models in the Database

I’m hesitant to change database models needlessly, but for site metadata it makes sense since one request can pull all the necessary info. I opted for creating a callback right in the database model.

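    def set_favicon_url
      Rails.logger.info "👾 set_favicon_url called for URL: #{url}"
      # Fetch and parse the page, then store the favicon URL it declares.
      page = MetaInspector.new(url)
      self.favicon_url = page.images.favicon
    rescue StandardError => e
      # A failed fetch should never block saving the link itself.
      Rails.logger.warn "⛔️ Could not fetch favicon for #{url}: #{e.message}"
    end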

The little 👾 emoji lets me quickly verify the callback was called successfully when reading the server logs.
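To see how the rescue clause behaves without booting Rails, here is a plain-Ruby sketch of the same pattern. The `LinkStub` class and its injectable `fetcher` are hypothetical stand-ins for the model and for the MetaInspector call. The only point is that a failed fetch leaves `favicon_url` as nil instead of raising.

```ruby
# Hypothetical stand-in for the model: plain Ruby, no Rails or gems.
class LinkStub
  attr_reader :url, :favicon_url

  # fetcher is any callable that takes a URL and returns a favicon URL
  # (or raises). In the real model, MetaInspector plays this role.
  def initialize(url, fetcher:)
    @url = url
    @fetcher = fetcher
    @favicon_url = nil
  end

  def set_favicon_url
    @favicon_url = @fetcher.call(url)
  rescue StandardError => e
    # Swallow the error so a broken site never blocks saving the link.
    warn "Could not fetch favicon for #{url}: #{e.message}"
  end
end

ok     = LinkStub.new("https://example.com", fetcher: ->(u) { "#{u}/favicon.ico" })
broken = LinkStub.new("https://example.org", fetcher: ->(_u) { raise "timeout" })

ok.set_favicon_url     # favicon_url is now set
broken.set_favicon_url # logs a warning, favicon_url stays nil
```

Because the failure case ends with nil, the rest of the app only needs to handle one "no favicon" state.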

First I added a new column to the link posts table for the favicon URL; in a future update I’ll replace it with a single metadata object.
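As a rough idea of what that single metadata object could look like, here is a sketch that serializes everything into one JSON string suitable for a single column. The `LinkMetadata` name, its fields, and both method names are assumptions made for illustration; nothing here is decided yet.

```ruby
require "json"

# Hypothetical shape for a single metadata object; the field names are
# illustrative only.
LinkMetadata = Struct.new(:title, :description, :image_url, :favicon_url, keyword_init: true) do
  # Serialize to a JSON string for storage in one column, dropping nils.
  def to_json_column
    JSON.generate(to_h.compact)
  end

  # Rebuild the object from the stored JSON string.
  def self.from_json_column(raw)
    new(**JSON.parse(raw, symbolize_names: true))
  end
end

meta = LinkMetadata.new(title: "Example", favicon_url: "https://example.com/favicon.ico")
stored = meta.to_json_column
restored = LinkMetadata.from_json_column(stored)
```

Fields that were never fetched simply come back as nil, which matches how the favicon column behaves today.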

Before creating or updating a link post, I fetch the metadata (only the favicon for now) and update the column’s content.

Then in the View layer I render an image tag for the favicon unless the column value is nil, in which case I fall back to the 🔗 SVG I’d been using before.
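The fallback logic boils down to something like the helper below. This is a minimal plain-Ruby sketch rather than the actual Lettra view code: `favicon_tag`, `FALLBACK_ICON`, and the markup are hypothetical, and treating a blank string like nil is an extra assumption on my part.

```ruby
require "cgi"

# Hypothetical stand-in for the inline link SVG used as the fallback.
FALLBACK_ICON = '<svg class="link-icon" aria-hidden="true"></svg>'

# Returns an <img> tag for the favicon, or the fallback SVG when no
# favicon URL was stored for the link.
def favicon_tag(favicon_url)
  # Assumption: a blank string is treated the same as nil.
  return FALLBACK_ICON if favicon_url.nil? || favicon_url.strip.empty?

  # Escape the URL so it is safe inside an HTML attribute.
  %(<img src="#{CGI.escapeHTML(favicon_url)}" alt="" width="16" height="16">)
end
```

In a Rails view the same decision would more likely be made with the built-in `image_tag` helper, but the branching is identical.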

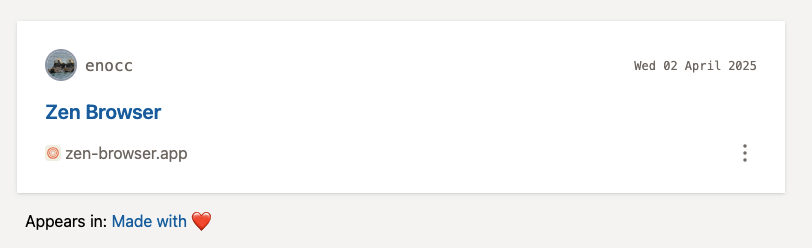

Here’s an example of a link partial rendered in Lettra:

This partial shows my saved link to the Zen web browser’s website. It shows the favicon down in the corner and a note indicating that this link appears in my “Made with ❤️” collection, which is for websites that have that message in their footer, just like most startups used to back in the 2010s.

So far it works pretty well. Fetching happens only when a new link post is added, and metadata is readily accessible anywhere the link is being used.

I’m pretty happy with this solution since I’ll be able to render link previews later on and I’ll have full control over each metadata element. Not relying on an external API is also pretty nice.

Updating Existing Links

For existing links, I had to update the production database. I made the callback method execute before both saving and updating records. Since there are a few links saved already, I ran this loop in the console:

    LinkPost.where(favicon_url: nil).find_each do |link_post|
      # Saving triggers the set_favicon_url callback, which fills in the column.
      link_post.save
    end

That populated all the favicons it found and saved them to the database. In following iterations I’ll be designing and populating a full preview like the one from Bluesky, but now I’ll have full control over how it looks and what it displays.

Should be pretty easy now that I spent the past week porting all styles to TailwindCSS.